A Development of the Standard English Listening Test of Pibulsongkram Rajabhat University

DOI:

https://doi.org/10.14456/psruhss.2024.34

Keywords:

Standardized test, English listening ability, Item response theory (IRT)

Abstract

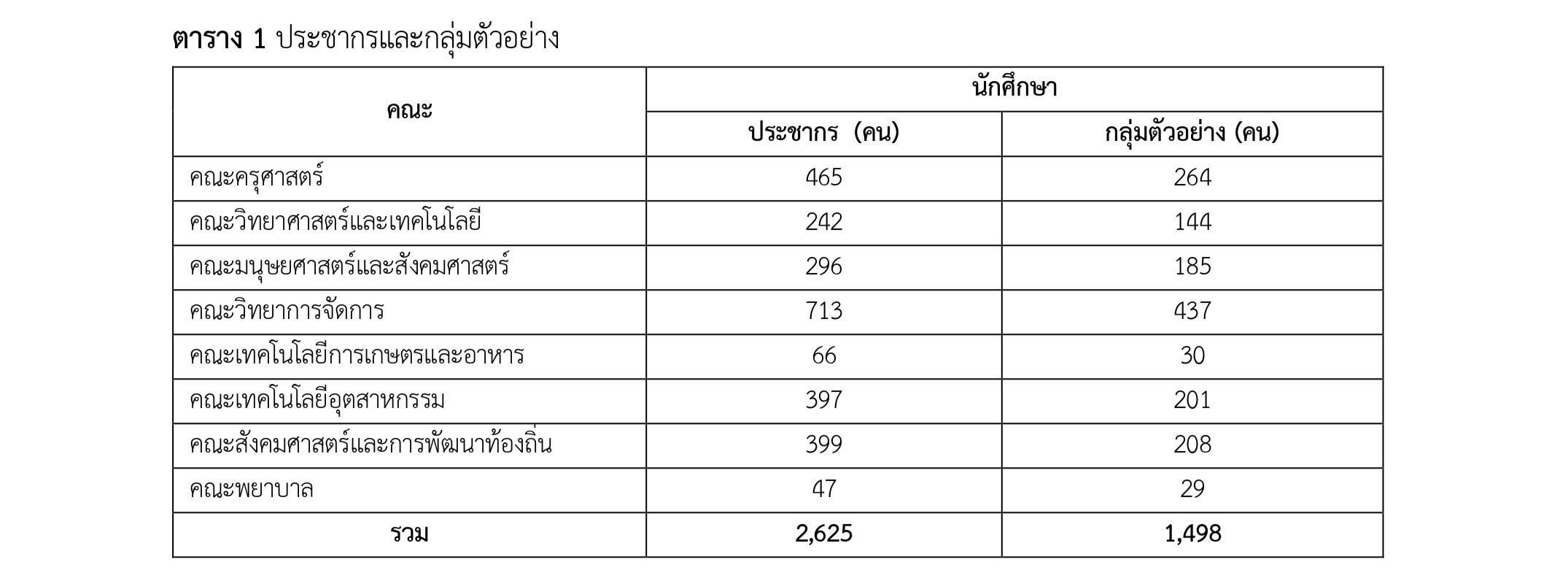

This research had two purposes: 1) to develop a standard English listening test for students at Pibulsongkram Rajabhat University and 2) to assess the quality of that test. The sample consisted of 1,498 third-year students from 8 faculties in the academic year 2564 B.E. (2021 CE). The research instrument comprised two sets of standard English listening test items, 100 items each and 200 items overall. The test was analyzed using Item Response Theory (IRT). Statistical analysis included percentage, mean, standard deviation, difficulty index, discrimination power, guessing, and test reliability. The research findings indicated that:

- Each of the two sets of the standard English listening test for Pibulsongkram Rajabhat University is divided into four sections with a total of 100 items. High-frequency vocabulary, useful for learners and recommended for prior learning, was included in the test by selecting 95% of the most frequently used words. The Index of Item-Objective Congruence (IOC) is 0.99, and the Cronbach's alpha coefficient for both versions is 0.904.

- The quality assessment of the standard English listening test for Pibulsongkram Rajabhat University, based on Item Response Theory (IRT), showed that Set 1 had item difficulty (b) ranging from 0.70 to 2.47, discrimination power (a) ranging from -2.23 to 1.62, and guessing (c) ranging from 0.00 to 0.26. Set 2 had item difficulty (b) ranging from 0.31 to 2.48, discrimination power (a) ranging from -1.47 to 2.62, and guessing (c) ranging from 0.00 to 0.29. A total of 56 items in Set 1 and 76 items in Set 2 passed the IRT criteria, giving 132 qualified items across both sets.
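
For readers unfamiliar with the statistics named above, the following is a minimal sketch of how difficulty, discrimination, and guessing combine in a three-parameter logistic (3PL) IRT model, and of how Cronbach's alpha is computed from a response matrix. It assumes the standard 3PL form; the abstract does not state which IRT model or software the authors used, and the function names and sample values here are hypothetical illustrations, not data from the study.

```python
import math
from statistics import pvariance


def p_correct_3pl(theta, a, b, c):
    """Probability of a correct answer under the standard 3PL model.

    theta: examinee ability, a: discrimination, b: difficulty, c: guessing.
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def cronbach_alpha(scores):
    """Cronbach's alpha for a score matrix (rows: examinees, columns: items)."""
    k = len(scores[0])
    # Variance of each item across examinees.
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    # Variance of the examinees' total scores.
    total_var = pvariance([sum(row) for row in scores])
    return (k / (k - 1)) * (1.0 - sum(item_vars) / total_var)


# Hypothetical item parameters (illustrative only, not taken from the study).
p = p_correct_3pl(theta=0.5, a=1.2, b=0.5, c=0.2)
print(round(p, 3))  # 0.6, because at theta == b the model gives c + (1 - c) / 2

# Hypothetical responses: 4 examinees x 3 items (1 = correct, 0 = incorrect).
responses = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(cronbach_alpha(responses), 3))  # 0.75
```

Under this form, an item with negative discrimination has a probability of success that falls as ability rises, which is one common reason an item fails screening; the specific criteria applied in this study are not given in the abstract.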

License

Copyright (c) 2024 Humanities and Social Sciences Journal of Pibulsongkram Rajabhat University

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Articles and comments appearing in the Humanities and Social Sciences Journal of Pibulsongkram Rajabhat University are the intellectual property of their authors and do not necessarily reflect the views of the editorial board. Published articles are copyrighted by the Humanities and Social Sciences Journal of Pibulsongkram Rajabhat University.